Introduction

This lab built on the work of Lab 9, where we used the robot to map out a room's walls, and Lab 10, where we simulated Bayes filtering to localize a virtual robot in a room.

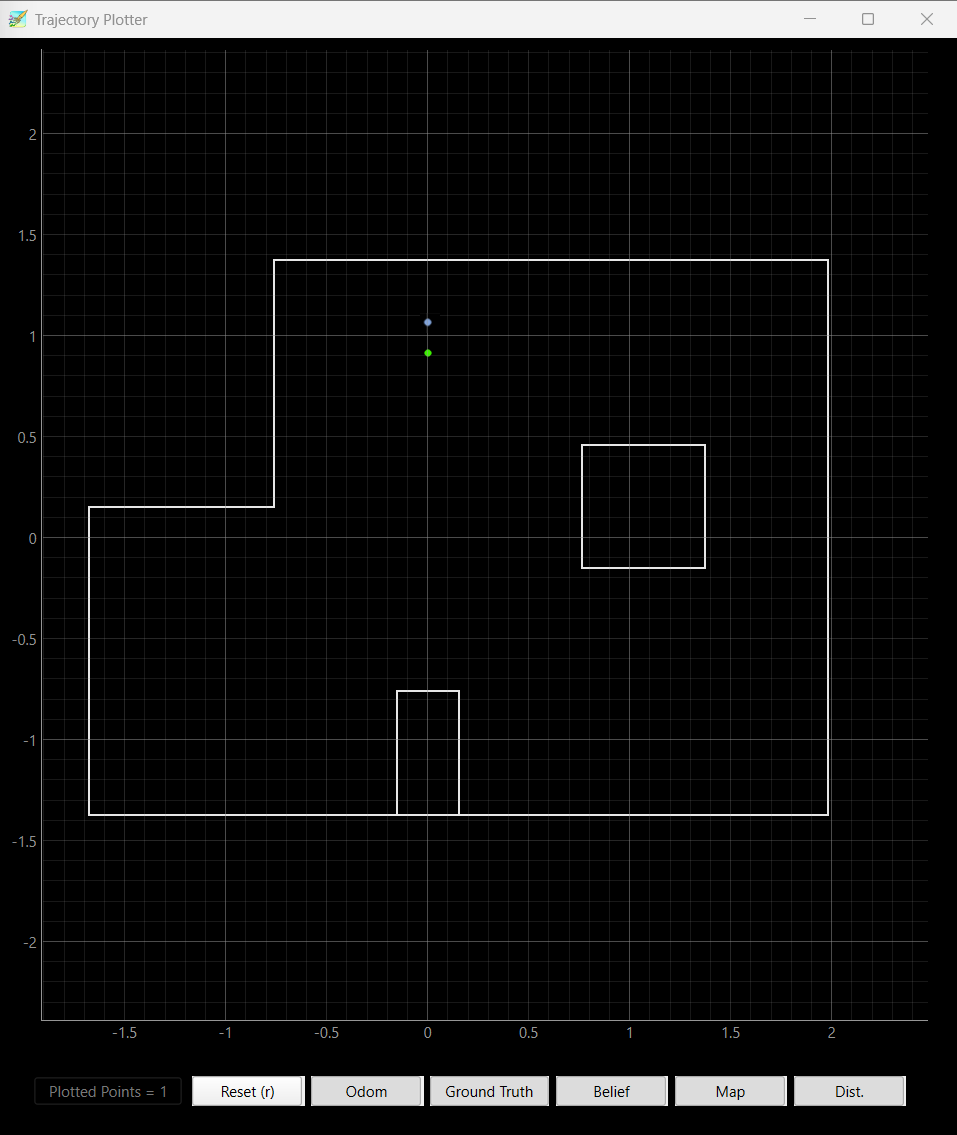

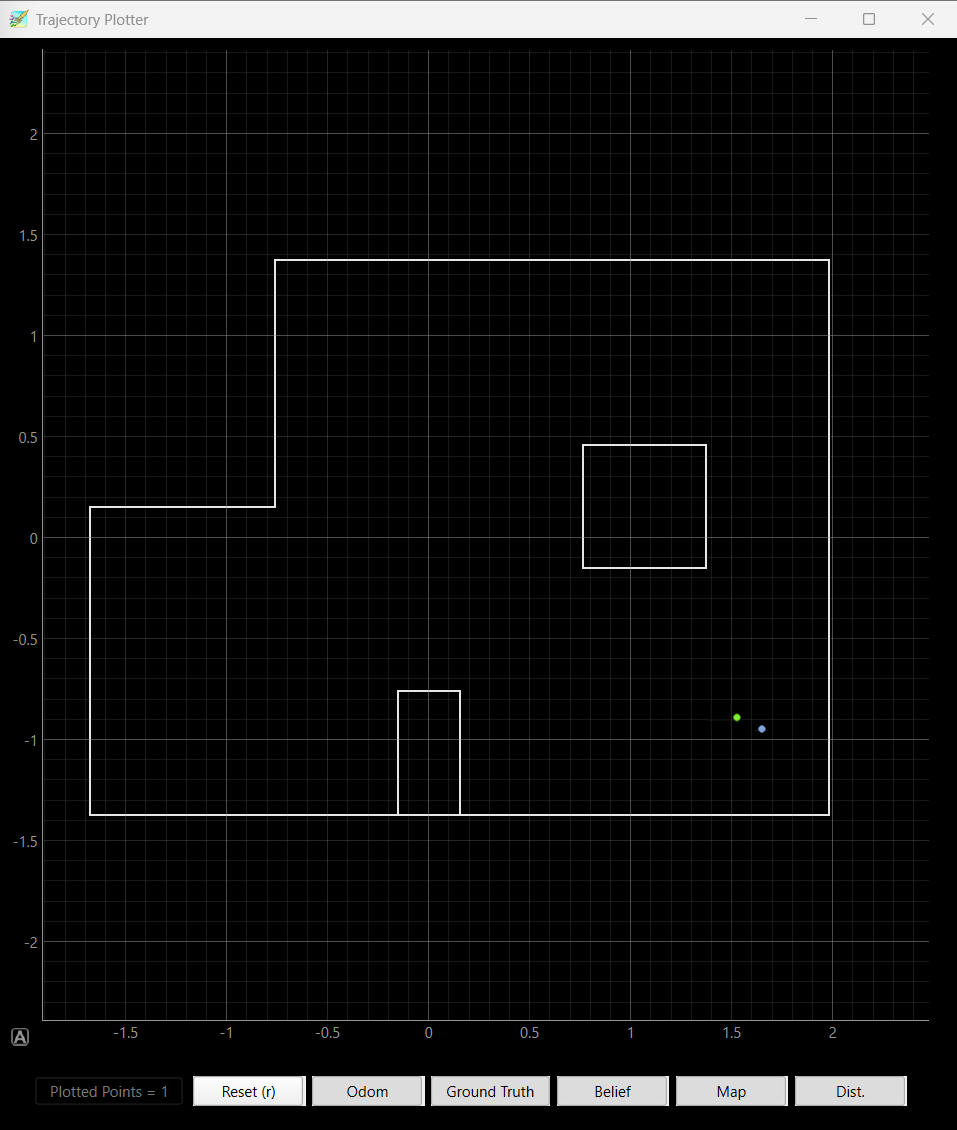

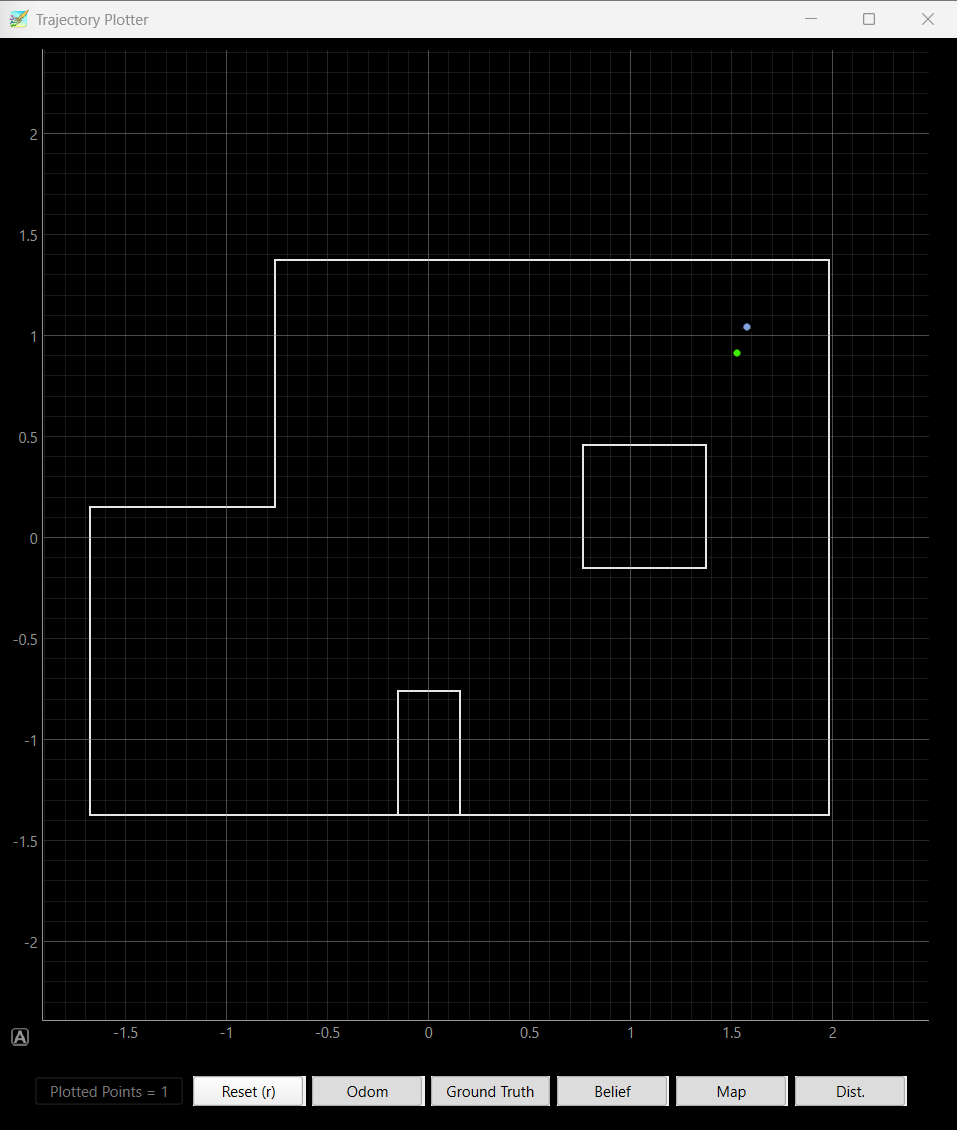

Testing Localization in Simulation

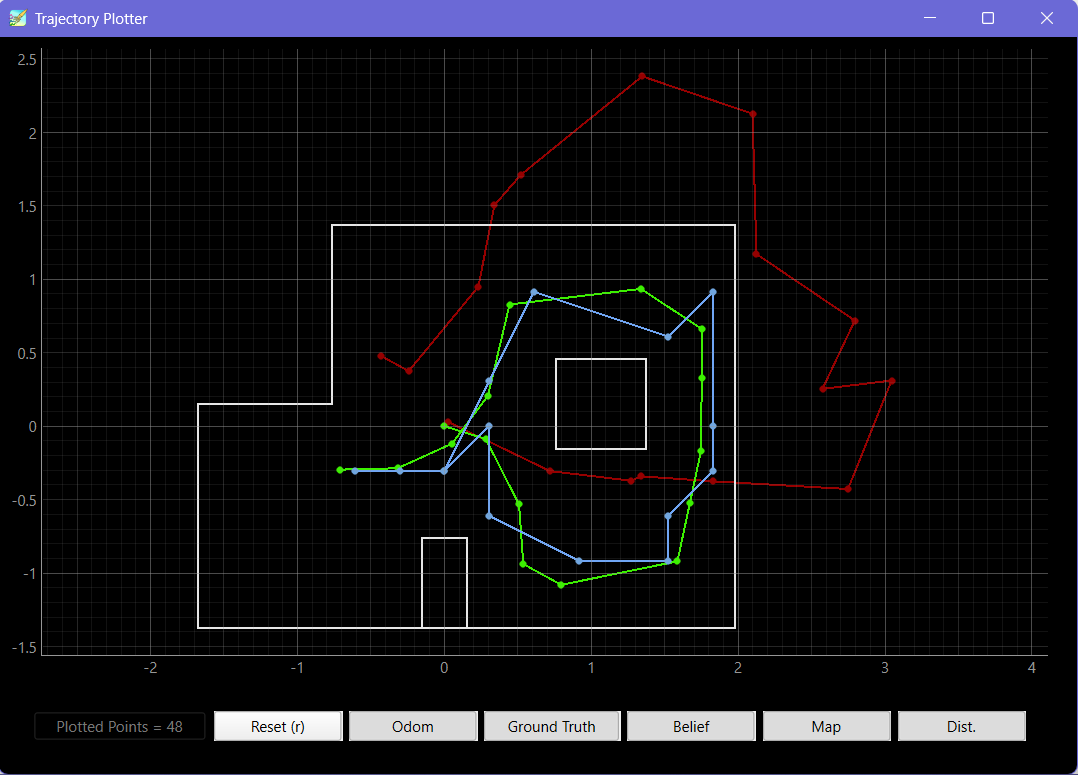

To verify that my Lab 11 setup works, I ran the Bayes filtering and localization simulator and checked that the results made sense. The simulated robot completed its trajectory as expected: the belief (blue) tracked the ground truth (green), while the odometry estimate (red) drifted off over time.

Localization in Real Life

In the simulation, we had a ground truth to compare the robot's localization against. On the real robot we don't have that ground truth, since nothing reports where the robot actually is in the room. Instead, we establish ground truth ourselves by placing the robot at known locations around the room and running a single localization step at each spot.
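As a rough illustration of what a "single localization step" involves, the sketch below runs only the update step of a grid-based Bayes filter, starting from a uniform prior. That fits this setup because the robot is placed by hand and does not move between being placed and being observed. It assumes the expected range for every grid cell and bearing has already been precomputed from the map (for example by ray-casting), with the grid flattened to one row per cell; `update_step` and its arguments are hypothetical names, not the course's localization code.

```python
import numpy as np


def update_step(expected_views, observations, sigma):
    """One Bayes filter update from a uniform prior (no prediction step).

    expected_views: (num_cells, num_readings) ranges each cell *should* see
                    (assumed to be precomputed from the map).
    observations:   (num_readings,) measured ranges, same units.
    sigma:          standard deviation of the Gaussian sensor model.
    """
    num_cells = expected_views.shape[0]
    prior = np.full(num_cells, 1.0 / num_cells)  # robot could be anywhere
    # Sum log-likelihoods over readings so many small probabilities don't underflow.
    log_lik = -0.5 * np.sum(((observations - expected_views) / sigma) ** 2, axis=1)
    log_post = np.log(prior) + log_lik
    log_post -= log_post.max()                   # stabilize before exponentiating
    posterior = np.exp(log_post)
    return posterior / posterior.sum()           # normalize to a distribution
```

The most likely pose is then the cell with the largest posterior, e.g. `np.argmax(posterior)`.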

In this lab, I'll run localization at four locations in the room:

(-3 ft, -2 ft, 0 deg)

(0 ft, 3 ft, 0 deg)

(5 ft, -3 ft, 0 deg)

(5 ft, 3 ft, 0 deg)
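The test poses above are given in feet, so comparing them against a belief requires a unit conversion. The helper below is a hypothetical sketch that assumes the localization code reports the belief as (x in meters, y in meters, heading in degrees); it converts a ground-truth pose and returns the position and heading error.

```python
import math

FT_TO_M = 0.3048  # the international foot is defined as exactly 0.3048 m

# Ground-truth test poses listed above: (x [ft], y [ft], heading [deg])
GROUND_TRUTH_FT = [(-3, -2, 0), (0, 3, 0), (5, -3, 0), (5, 3, 0)]


def ft_pose_to_m(pose_ft):
    """Convert an (x ft, y ft, heading deg) pose to (x m, y m, heading deg)."""
    x_ft, y_ft, heading_deg = pose_ft
    return x_ft * FT_TO_M, y_ft * FT_TO_M, heading_deg


def pose_error(belief_m, truth_ft):
    """Return (position error in m, heading error in deg wrapped to [-180, 180))."""
    tx, ty, tth = ft_pose_to_m(truth_ft)
    bx, by, bth = belief_m
    position_err = math.hypot(bx - tx, by - ty)
    heading_err = (bth - tth + 180.0) % 360.0 - 180.0
    return position_err, heading_err
```

Wrapping the heading error keeps a belief of 350 deg from looking 350 deg wrong when the true heading is 0 deg; it is reported as -10 deg instead.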

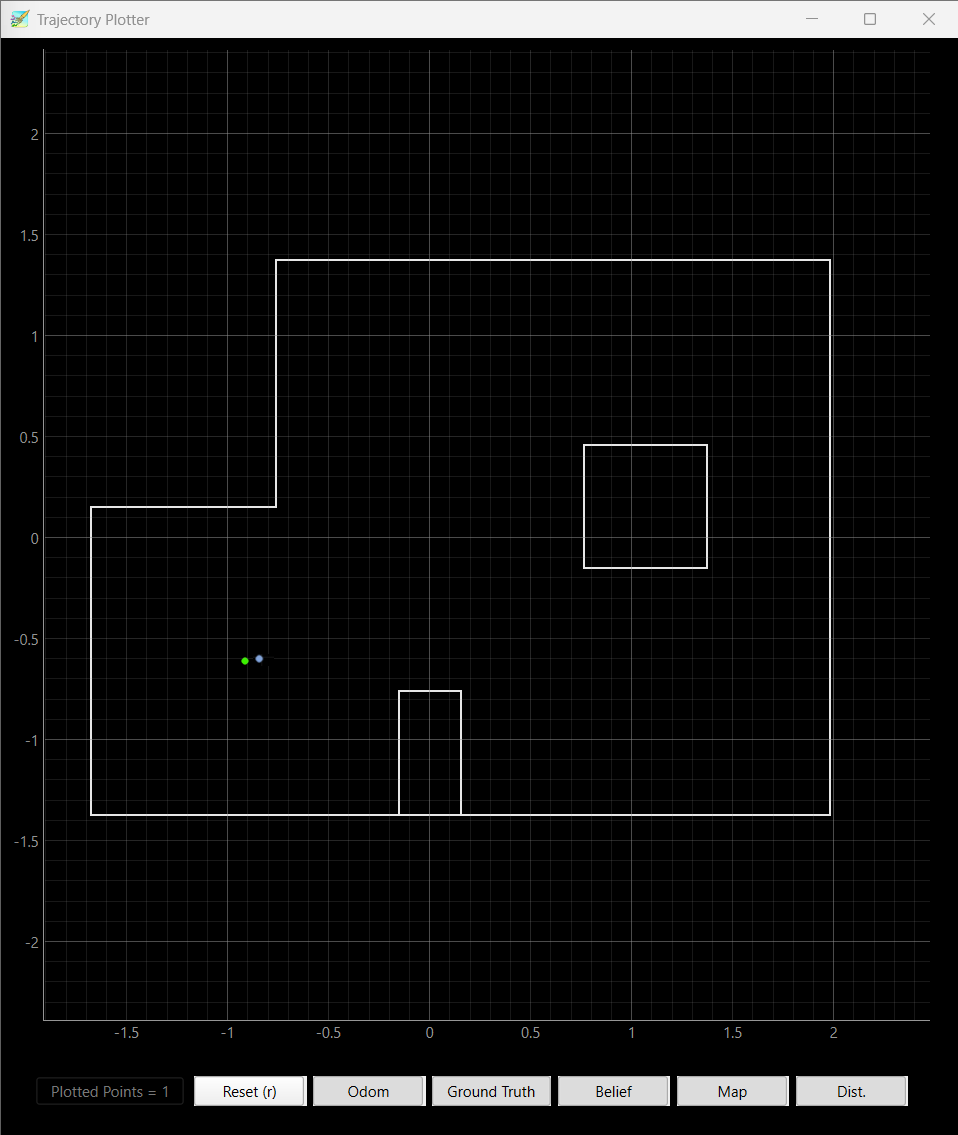

After running a localization step at each of these locations, the estimated position will be marked in blue on the "Trajectory Plotter" provided by the course staff. We will then compare the estimated position to the ground truth position in green.

Implementation

The robot only rotates in place and collects data. The localization itself runs on my PC, so I needed to set up data collection that sends the readings back to my Python notebooks, where the computation happens.

perform_observation_loop()

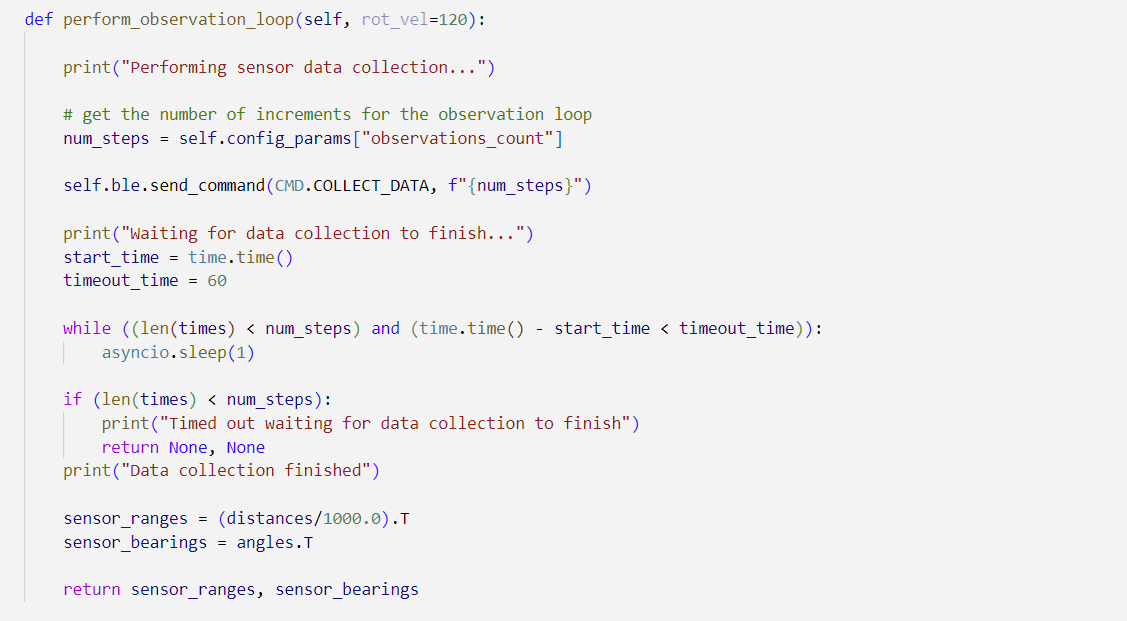

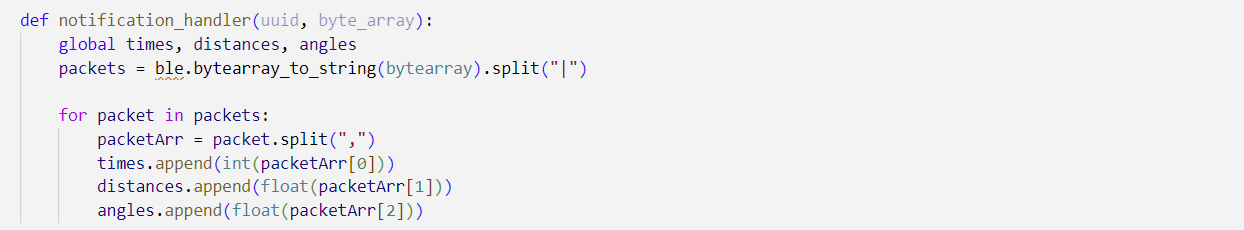

To collect the data, I wrote perform_observation_loop(), which sends the COLLECT_DATA command to the robot. This command makes the robot rotate 360 degrees, take 18 ToF readings spaced 20 degrees apart, and send that data back to my PC.
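The general host-side pattern is to trigger the scan, then wait without blocking until all 18 readings have arrived. Below is a minimal sketch of that pattern, not the code from this lab. It assumes an asyncio-based BLE setup (as with the bleak library) in which a notification callback appends (distance_mm, angle_deg) tuples to a shared list. `collect_observations` and `send_command` are hypothetical names, and the millimeter units and column-vector output layout are assumptions.

```python
import asyncio

import numpy as np


async def collect_observations(send_command, readings, num_readings=18,
                               timeout_s=30.0, poll_s=0.1):
    """Trigger a 360-degree scan and wait for all readings to arrive.

    send_command: hypothetical callable that sends a command string to the robot.
    readings:     list that a BLE notification callback (assumed) appends
                  (distance_mm, angle_deg) tuples to.
    Returns (ranges_m, bearings_deg) as (num_readings, 1) column arrays.
    """
    readings.clear()                    # drop data from any previous scan
    send_command("COLLECT_DATA")        # robot spins and streams its readings
    loop = asyncio.get_running_loop()
    deadline = loop.time() + timeout_s
    while len(readings) < num_readings:
        if loop.time() > deadline:
            raise TimeoutError(f"received {len(readings)} of {num_readings} readings")
        # Sleep asynchronously so the event loop can run notification callbacks;
        # time.sleep() here would block them.
        await asyncio.sleep(poll_s)
    data = np.array(readings[:num_readings], dtype=float)
    ranges_m = data[:, 0:1] / 1000.0    # assumed mm -> m, kept as a column
    bearings_deg = data[:, 1:2]
    return ranges_m, bearings_deg
```

In a Jupyter notebook, which already runs an event loop, this would be awaited directly (`ranges, bearings = await collect_observations(...)`) rather than wrapped in `asyncio.run()`.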

perform_observation_loop() relies on three NumPy arrays: times, distances, and angles. These arrays are filled in by the notification handler, which parses the data the robot sends back.

Together, these three arrays hold all the data needed for a single localization step.
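A notification handler of this kind could look like the sketch below. The message format (`time|distance|angle` as ASCII text) is an assumption for illustration, not necessarily what my robot sends, and `ObservationLog` is a hypothetical name. The `handle(sender, data)` signature follows the `(sender, data)` convention that bleak uses for notification callbacks.

```python
import numpy as np


class ObservationLog:
    """Accumulates parsed robot messages into times, distances, and angles."""

    def __init__(self):
        self.times, self.distances, self.angles = [], [], []

    def handle(self, sender, data):
        # Assumed message format: b"<time_ms>|<distance_mm>|<angle_deg>"
        t, d, a = data.decode().strip().split("|")
        self.times.append(float(t))
        self.distances.append(float(d))
        self.angles.append(float(a))

    def arrays(self):
        # Convert to NumPy arrays once the scan is complete.
        return (np.array(self.times), np.array(self.distances),
                np.array(self.angles))
```

Appending to Python lists during the scan and converting once at the end avoids reallocating a NumPy array on every notification.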

Localization results

Here are the results of running a single localization step at each position:


Position (-3, -2)

Overall, localization worked very well here, and of all four points I think this one was the easiest to localize. The position (-3, -2) is equidistant from the far, near, and left walls, so a bias that affects every reading similarly tends to cancel out instead of pulling the estimate toward any one wall. This is reflected in the result: the blue belief dot is centered in that section of the room.
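A one-dimensional simplification makes the cancellation argument concrete. Assume (for illustration only) that the robot sits at $x^\ast$ between two parallel walls a distance $W$ apart, that both readings use the same Gaussian sensor model, and that both are underestimated by the same amount $b$. The most likely position then works out to the true one:

```latex
\begin{aligned}
z_L &= x^\ast - b, \qquad z_R = (W - x^\ast) - b,\\
\hat{x} &= \arg\min_x \left[(z_L - x)^2 + \bigl(z_R - (W - x)\bigr)^2\right] = \frac{W + z_L - z_R}{2},\\
\hat{x} &= \frac{W + (x^\ast - b) - (W - x^\ast - b)}{2} = x^\ast.
\end{aligned}
```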

Position (0, 3)

I think this point was the most susceptible to sensor bias, because the ToF sensor tends to underestimate the distance to a wall when the wall is close. I showed this all the way back in Lab 3, and here the bias pushes my belief closer to the top wall than it should be. Overall, though, localization still worked fairly well.
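To see why a close-range underestimate pulls the belief toward the near wall, here is a small one-dimensional sketch. The bias model (a fixed 5 cm underestimate below 0.6 m) and the geometry are illustrative assumptions, not my Lab 3 measurements. The robot sits between two walls, and the maximum-likelihood position is found by a grid search under a Gaussian sensor model.

```python
import numpy as np


def biased_tof(true_dist_m, near_bias_m=0.05, near_threshold_m=0.6):
    """Hypothetical bias model: close readings are underestimated by a
    fixed amount; farther readings are exact."""
    if true_dist_m < near_threshold_m:
        return true_dist_m - near_bias_m
    return true_dist_m


def ml_position(z_left, z_right, wall_gap_m, sigma=0.1, num_cells=3001):
    """Most likely x between walls at 0 and wall_gap_m, under a Gaussian
    sensor model with equal sigma for both readings."""
    xs = np.linspace(0.0, wall_gap_m, num_cells)
    log_lik = -0.5 * (((z_left - xs) / sigma) ** 2
                      + ((z_right - (wall_gap_m - xs)) / sigma) ** 2)
    return float(xs[np.argmax(log_lik)])


W, x_true = 3.0, 2.5                # robot sits 0.5 m from the right ("top") wall
z_left = biased_tof(x_true)         # 2.5 m to the far wall: read exactly
z_right = biased_tof(W - x_true)    # 0.5 m to the near wall: read as 0.45 m
x_hat = ml_position(z_left, z_right, W)
```

Under these assumptions the estimate lands at about 2.525 m, i.e. half the bias (2.5 cm) closer to the near wall than the true position. Only the near reading is biased, so it no longer cancels against the far one.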

Position (5, -3)

The result shown here was the best of the few times I tried localizing at this position; in the other attempts, the belief landed away from the green dot. I think this is because the features the robot detected here were more complex, with the hallway above and the open space to the upper left. Errors in angle estimation also affect the localization result significantly, which I think is why this position was so variable.

Position (5, 3)

Like the last position, this one also showed some variability, although less. I think I did four runs of localization here, and the robot localized correctly in two of them. The features here are simpler than at (5, -3), and the robot is closer to nearby walls and obstacles, so small angle errors matter less. I think this is why the result was more consistent.
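A simple geometric sketch shows why distance amplifies angle errors. Assume a ToF ray meant to hit a wall head-on, and a robot heading that is off by a small angle. The point the ray actually hits then shifts sideways along the wall by range × tan(error). With a 5 degree error, that is about 2.6 cm at 0.3 m but about 17.5 cm at 2 m, so readings taken from far away are much more likely to land on a different feature than the map predicts.

```python
import math


def wall_hit_offset(range_m, heading_error_deg):
    """Sideways shift of the point a ToF ray hits on a wall perpendicular
    to the intended ray, when the heading is off by heading_error_deg."""
    return range_m * math.tan(math.radians(heading_error_deg))


for r in (0.3, 1.0, 2.0):
    shift_cm = wall_hit_offset(r, 5.0) * 100
    print(f"{r:.1f} m range, 5 deg error -> {shift_cm:.1f} cm shift")
```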

Conclusion

In my case, I used a PD controller to rotate the robot to each angle setpoint, but the robot showed a lot of hysteresis. Sometimes it would overshoot the setpoint and come back, and sometimes it wouldn't. Depending on whether it overshot, the robot approached the setpoint from opposite sides, which determined whether its final angle for that sample ended up past the setpoint or short of it. I trusted my ToF sensors to be reasonably accurate, but my mapping data was still a little noisy. I'm fairly sure this came from how well the PD controller tracked the angle setpoints, and with a better controller I think I would get more consistent localization results.

Overall, though, I'm really happy with how well some of my samples turned out, and I'm looking forward to putting all of this control together in Lab 12.